The global digital economy is currently navigating a structural transformation that parallels the industrial revolutions of the past, moving from a foundation of manual and digital labor toward a future defined by autonomous computational intelligence. At the epicenter of this shift is the concept of AAAS—a multifaceted term that represents the convergence of Analytics-as-a-Service, Agent-as-a-Service, and the overarching framework of Artificial Intelligence-as-a-Service (AIaaS). For two decades, Software-as-a-Service (SaaS) defined the relationship between enterprises and technology, focusing on the delivery of standardized tools designed to augment human work processes. However, as 2026 approaches, the industry is transitioning from software as a static tool to software as an active, reasoning agent. This report examines the technical, economic, and operational dimensions of AAAS, providing a comprehensive roadmap for professional peers navigating the transition toward an intelligence-native enterprise.

The Taxonomy of Cloud Intelligence Models

Understanding AAAS requires a rigorous baseline of the broader cloud ecosystem. Historically, cloud computing was categorized into three primary service models: Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS). These models represent different tiers of management responsibility and architectural control.

IaaS provides the virtualized fundamental resources—compute, storage, and networking—allowing organizations to build custom environments from the ground up. It offers the highest level of control but imposes a significant technical burden, requiring internal teams to manage operating systems, runtimes, and middleware. In contrast, PaaS simplifies the development lifecycle by providing a managed platform where developers focus exclusively on application code and data management, leaving the underlying infrastructure to the provider. SaaS completes this hierarchy by delivering fully functional, cloud-hosted applications directly to end-users via the web, eliminating the need for installation or ongoing maintenance by the customer.

The emergence of AIaaS (Artificial Intelligence as a Service) has introduced a critical fourth layer. AIaaS provides pre-trained models and APIs that allow organizations to integrate sophisticated intelligence—such as natural language processing (NLP), computer vision, and speech-to-text—without the prohibitive costs of training foundational models or managing specialized hardware like high-end GPUs. AAAS, in its various forms, represents the operationalized manifestation of these intelligence layers within the business environment.

Comparative Analysis of Cloud Management Responsibilities

The following table illustrates the shift in ownership and control across the cloud stack, highlighting where the “As-a-Service” provider assumes responsibility.

| Component | On-Premises | IaaS | PaaS | SaaS | AIaaS/AAAS |

| Physical Hardware | User | Provider | Provider | Provider | Provider |

| Virtualization | User | Provider | Provider | Provider | Provider |

| Operating System | User | User | Provider | Provider | Provider |

| Middleware/Runtime | User | User | Provider | Provider | Provider |

| Application Logic | User | User | User | Provider | Provider |

| Data Management | User | User | User | User | Shared/Provider |

| Model Inference | User | User | User | Provider | Provider |

Deconstructing the AAAS Acronym: Analytics, Agents, and Authentication

In the contemporary technical discourse, AAAS functions as a polysemous acronym. Its meaning is context-dependent, referring to different specialized domains that are increasingly unified under the umbrella of generative AI and autonomous systems.

Analytics-as-a-Service (AaaS)

Analytics-as-a-Service refers to the cloud-based delivery of data analysis tools and platforms designed to extract insights from enterprise datasets. Historically, this model focused on descriptive analytics—summarizing historical trends via dashboards and reports. However, as the data landscape shifts, AaaS is evolving into “Agentic Analytics,” where AI systems independently plan, execute, and verify entire analytical workflows. This evolution moves the user experience from manual data exploration toward a state where the software proactively surfaces anomalies and identifies causal relationships without human prompting.

Agent-as-a-Service (AaaS)

Agent-as-a-Service represents the most significant paradigm shift in 2026. This model characterizes the delivery of “digital workers”—autonomous AI agents capable of reasoning, making decisions, and executing tasks across multiple systems without continuous human direction. Unlike traditional automation, which relies on rigid scripts, AI Agent as a Service functions as a dynamic workforce that learns, adapts, and delivers specific outcomes rather than just providing a tool for human input. Reports suggest that organizations adopting Agent-as-a-Service can reduce operational costs by an average of 35% while increasing overall productivity by 40%.

Authentication-as-a-Service (AaaS)

While often treated as a subset of cybersecurity, Authentication-as-a-Service (or Identity-as-a-Service) is critical for the AAAS ecosystem. It provides cloud-based control over permissions used to access networks, models, and data. In an environment where AI agents autonomously interact with sensitive corporate data, robust, AI-enhanced identity management is essential to prevent unauthorized access and ensure that agents operate within their designated safety parameters.

The Market Landscape of AIaaS: 2025–2026 Analysis

The market for Artificial Intelligence as a Service is entering a phase of explosive growth, driven by the mass adoption of generative AI and the transition from pilot projects to full-scale production deployments. The global AIaaS market was valued at approximately USD 20.64 billion in 2025 and is projected to reach USD 126.08 billion by 2031, with a compound annual growth rate (CAGR) of 35.20% during the forecast period.

Market Revenue and Growth Drivers

The surge in market valuation is supported by several key factors. First, the subscription-based model of AIaaS has significantly lowered the total cost of ownership (TCO) for small and medium-sized enterprises (SMEs), allowing them to access high-end machine learning capabilities without massive upfront capital expenditure. Second, the increasing demand for industry-specific AI solutions—particularly in highly regulated sectors like banking, financial services, and insurance (BFSI) and healthcare—is fueling the development of verticalized AIaaS offerings.

| Market Metric | 2025 Estimate | 2031/2034 Forecast | CAGR |

| Global AIaaS Market Size | USD 20.64B | USD 126.08B (2031) | 35.20% |

| U.S. AIaaS Market Size | USD 4.78B | USD 54.04B (2033) | 35.44% |

| Public Cloud Revenue Share | 77.35% | — | — |

| SME Growth Rate | — | — | 35.1% – 36.8% |

Regionally, North America dominated the market in 2025 with a share of approximately 36-37%, attributed to its advanced technological infrastructure and early adoption of AI across its corporate sector. However, the Asia-Pacific region is anticipated to register the fastest CAGR (approx. 38.7%) through 2034, driven by heavy investments in AI infrastructure by nations like China, India, and Singapore.

The Hyperscaler Ecosystem: AWS, Azure, and Google Cloud

The delivery of AIaaS and the broader AAAS ecosystem is dominated by three primary cloud service providers (CSPs): Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Each provider has developed a unique strategic focus, tailoring their AI services to specific enterprise needs.

Amazon Web Services (AWS)

AWS remains the market leader by offering the most extensive range of AI/ML-specific tools through its Amazon SageMaker platform. SageMaker simplifies the entire machine learning lifecycle, from data preparation to model deployment. AWS’s flagship AIaaS offerings include Amazon Rekognition for computer vision, Amazon Comprehend for natural language processing, and Amazon Polly for text-to-speech. AWS is preferred by organizations that require deep modularity and granular control, although it is often noted for having a steeper learning curve compared to its rivals.

Microsoft Azure

Azure is positioned as the most enterprise-friendly platform, particularly for organizations already integrated into the Microsoft software stack. Through its exclusive partnership with OpenAI, Azure provides the Azure OpenAI Service, allowing enterprises to leverage models like GPT-4 within a secure, compliant environment. Azure AI Speech and Cognitive Services offer pre-built APIs for developers, while Azure Machine Learning Studio provides a low-code/no-code interface suitable for less technical users.

Google Cloud Platform (GCP)

Google Cloud is recognized for its excellence in AI-heavy and data analytics workloads. Its Vertex AI platform offers a unified environment for building and deploying ML models, benefiting from Google’s native expertise in search and natural language understanding. GCP is distinguished by its use of specialized hardware, such as Cloud TPUs, which are optimized for training large-scale models efficiently. GCP is often cited as the leader in simplicity and user-friendly AutoML capabilities.

Performance and Infrastructure Benchmarks (2025-2026)

Technical decisions regarding cloud providers are increasingly influenced by performance metrics like latency and resource provisioning speed.

| Task / Metric | AWS | Azure | Google Cloud |

| VM Spin-Up Time | ~35s | ~30s | ~25s |

| API Response Latency | ~100ms | ~90ms | ~70ms |

| Static Content Delivery | ~60ms | ~50ms | ~40ms |

| GPU Access (High-end) | NVIDIA H100 | NVIDIA-powered VMs | Cloud TPUs / GPUs |

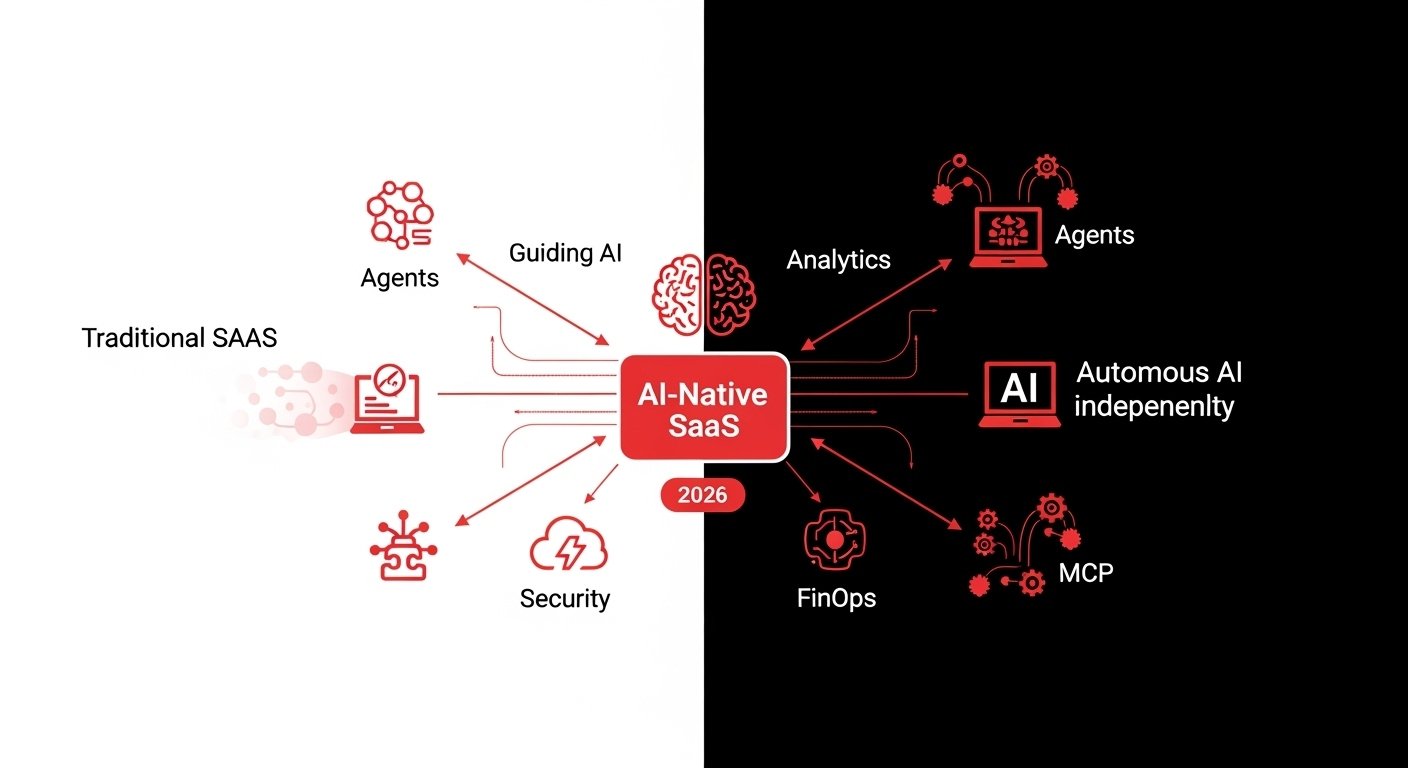

The 2026 Paradigm Shift: AI-Native SaaS and the Model Context Protocol

The transition from 2025 to 2026 marks a pivotal inflection in the SaaS industry. The era of “AI as a feature”—where generative AI capabilities were simply bolted onto existing platforms—is being replaced by “AI-native SaaS”.

Predictions for the 2026 SaaS Ecosystem

Research indicates that by late 2026, the traditional logic of the SaaS industry—streamlining human work and charging per user—will become largely invalid. Instead, software will replace labor, with AI applications directly delivering results rather than just providing the tools to achieve them.

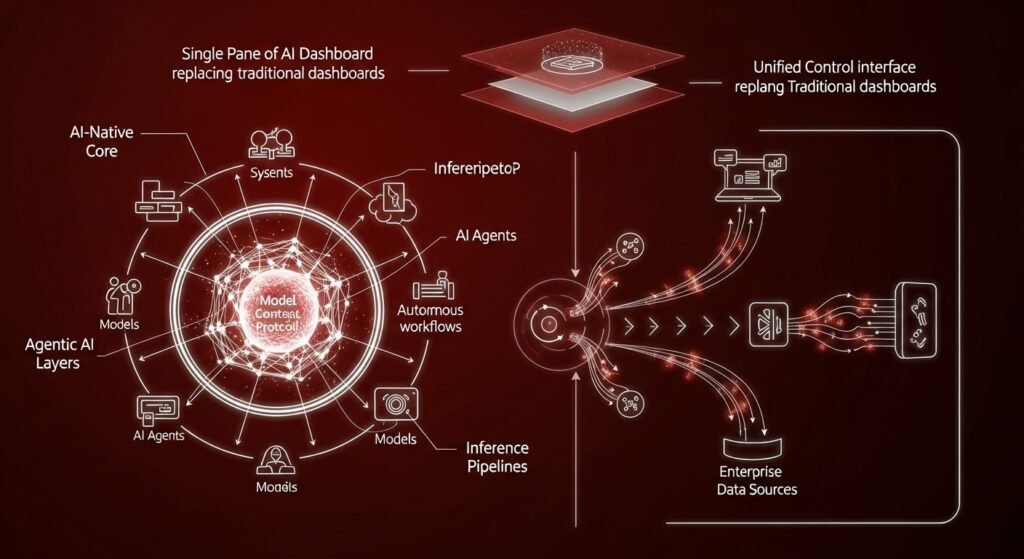

One of the most transformative technical developments in this period is the standard adoption of the Model Context Protocol (MCP). MCP serves as the new integration layer, moving away from fragmented REST APIs toward a unified canonical bridge that connects Large Language Models (LLMs) to internal enterprise data sources. This allows AI agents to maintain consistent context across multiple software tools without over-permissioning, enabling a “Single Pane of AI” that replaces the traditional “Single Pane of Glass” dashboard.

Architectural Transformation of SaaS

Traditional SaaS was database-centric, focusing on structured data and CRUD (Create, Read, Update, Delete) principles. In 2026, AI-native platforms prioritize computational intelligence and autonomy. These systems are architected around foundation models and inference pipelines from “day zero,” ensuring that intelligence is not an attachment but the core foundational logic of the application.

Small Language Models (SLMs): The Rise of Efficient Intelligence

While Large Language Models (LLMs) have historically dominated the discourse, 2026 is increasingly becoming the year of Small Language Models (SLMs). SLMs, typically defined as models with fewer than 10 billion parameters, are proving to be more economical and suitable for many enterprise-specific agentic applications.

Benefits of SLMs for Enterprise AAAS

SLMs provide several strategic advantages that make them ideal for specialized digital work:

- Reduced Operational Costs: SLMs require significantly less computational power and energy to operate, making them a cost-effective choice for high-volume, repetitive tasks.

- Faster Inference and Lower Latency: Due to their streamlined architecture, SLMs can generate responses more quickly than LLMs, which is critical for real-time applications like customer service chatbots or in-app assistants.

- Enhanced Data Privacy: Their small size allows them to be deployed locally on edge devices or mobile hardware, enabling on-device data processing that never touches the cloud. This is particularly valuable for the healthcare and finance sectors, which operate under stringent data residency laws.

- Lower Propensity for Hallucination: Because SLMs are often trained on narrower, higher-quality, domain-specific datasets, they are frequently more accurate and less prone to “hallucinations” than broad, general-purpose LLMs.

Heterogeneous Agentic Systems

The future of AAAS architecture involves heterogeneous systems where SLMs act as the primary workforce for repetitive sub-tasks, while LLMs are invoked selectively as “strategic orchestrators” for complex reasoning or open-domain dialogue. This modular approach optimizes the balance between performance and cost.

Sector-Specific Impact and Use Cases

The application of AAAS is driving measurable returns across various industry verticals, with the most significant impact seen in sectors with high administrative burdens or complex regulatory requirements.

Retail and Consumer Transformation

In the retail sector, agentic AI is redefining both storefront and back-office operations. A prominent example is “ADAM,” a context-aware robot developed through a collaboration between Richtech and Microsoft Azure AI. ADAM utilizes vision models and natural language processing to serve as a conversational assistant, adjusting its behavior based on variables like weather, time of day, and foot traffic. Beyond beverage service, retail agents are being deployed to guide customers to products, detect misplaced items on shelves, and provide voice-enabled in-aisle assistance—all while reducing the manual tracking burden on store managers.

Financial Services and Debt Collection

The shift from “software as a tool” to “software as labor” is vividly illustrated in the debt collection industry. Traditionally, SaaS companies sold management software to collection agencies. However, newer AI-native firms like Salient are directly replacing debt collectors with AI agents. These AI collectors are proficient in 21 languages, familiar with the complex legal provisions of all 50 U.S. states, and maintain consistent emotional stability. The result is not just a reduction in labor costs but a 50% increase in debt recovery rates, demonstrating that customers are increasingly willing to pay for outcomes rather than just tool access.

Healthcare and Diagnostics

In healthcare, AAAS is facilitating the transition toward predictive maintenance for medical equipment and hyper-personalized patient care. AI agents handle administrative tasks like appointment scheduling and follow-ups, while SLMs analyze patient data directly on mobile devices to provide real-time health insights while maintaining strict PII (Personally Identifiable Information) compliance.

Security Challenges and Risk Mitigation in the Agentic Era

As AI agents gain autonomy, the attack surface for enterprises shifts from binary code to human language and intent. 2026 has marked the end of the AI “hype” cycle and its solidification as a systemic attack vector.

Top AI Security Risks

Traditional security measures like SQL injection scanning are insufficient for protecting against language-based manipulation. The top risks in 2026 include:

- Direct Prompt Injection: Attackers craft inputs designed to override the system’s core instructions, potentially forcing the model to reveal internal MFA codes or proprietary business logic.

- Indirect Prompt Injection: Adversaries hide malicious commands within external data, such as websites or PDF resumes. When an AI agent processes this data, it executes the hidden command—such as exfiltrating the user’s browser history or credentials.

- Adversarial Prompt Chaining: A strategy where attackers use multiple turns of conversation to gradually “jailbreak” the model’s safety guardrails.

- Shadow Prompting/AI: The risk of employees using unmanaged personal AI accounts for corporate tasks, leading to the leakage of sensitive product roadmaps or financial data into public model training sets.

| Risk Category | Consequences | Risk Level |

| Prompt Injection | Manipulation of outputs; unauthorized actions | High |

| Data Exfiltration | Breach of PII, credentials, or IP; GDPR violations | Critical |

| Model Theft | Loss of proprietary algorithms and competitive edge | High |

| Supply Chain Poisoning | Use of malicious datasets or third-party model backdoors | Medium |

Practical Security Best Practices

To mitigate these risks, enterprises are adopting “AI-SPM” (AI Security Posture Management) frameworks. Key defensive strategies include:

- Prompt Firewalls: Implementing real-time validation and filtering layers that block malicious prompts before they reach the inference engine.

- Continuous Red Teaming: Regularly simulating attacks on LLMs to identify vulnerabilities in the model’s response boundaries.

- Least Privilege Access: Treating AI agents as high-value identities and applying strict “least privilege” policies to their system access rights.

- Runtime Behavior Monitoring: Establishing behavioral baselines for agents and triggering automated alerts when an agent deviates from its intended logic.

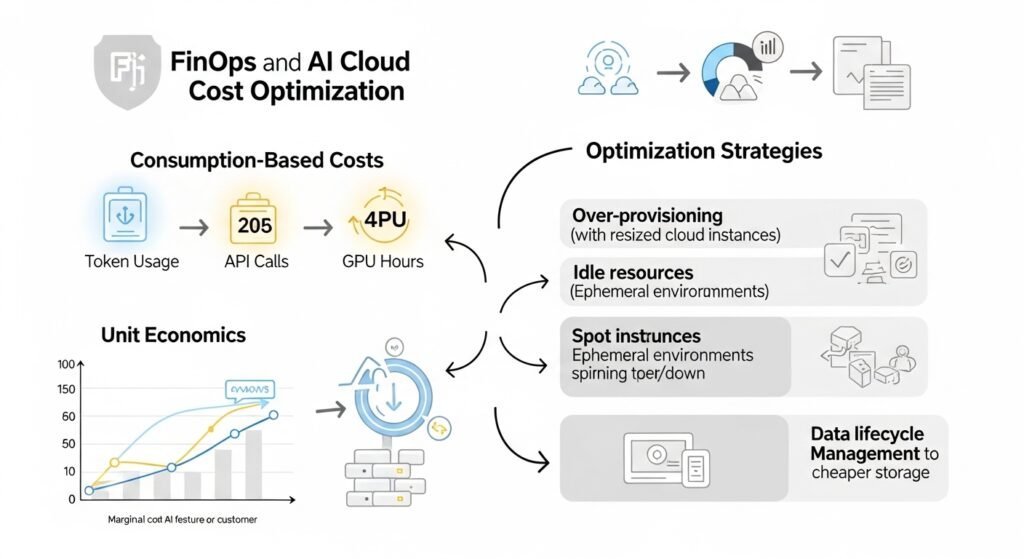

FinOps and Cloud Cost Optimization

The economic model of AAAS is characterized by consumption-based pricing, where costs are tied to token usage, API calls, and GPU hours. Without rigorous management, these “unpredictable” costs can quickly erode the ROI of AI initiatives.

The Shift to Unit Economics

In 2026, cloud cost optimization has moved from periodic reviews to continuous control loops. Enterprises are increasingly focused on “AI unit economics”—measuring the marginal cost of serving one more customer or running one more AI-powered feature.

Optimization Strategies for 2026

Research highlights several common “cost drains” and their corresponding solutions:

- Over-Provisioning: Teams often run instances 50-100% larger than necessary. Rightsizing involves analyzing actual CPU and memory utilization to match resource allocation precisely to demand.

- Idle Resources: Development environments often run 24/7 despite being used only 8 hours a day. Implementing “ephemeral environments” that spin up on demand and shut down when idle can cut infrastructure costs by up to 70-80%.

- Spot Instances: For fault-tolerant workloads like ML model training or batch processing, using “Spot Instances” (unused cloud capacity) can provide discounts of 50-90% compared to on-demand pricing.

- Data Lifecycle Management: High storage costs for logs and old data can be reduced by 50-80% through automated policies that move older data to cheaper storage tiers or “cold” archives.

Ethical Governance and Regulatory Compliance

The adoption of AAAS is no longer just a technical challenge but a legal and ethical one. In February 2026, several key global regulations, including parts of the EU AI Act and Colorado’s AI Act, go into effect, regulating the deployment of “high-risk” AI systems.

Core Ethical Principles for AAAS

Organizations are encouraged to align their AI deployments with the UNESCO Recommendation on the Ethics of AI, which outlines ten core principles :

- Transparency and Explainability: Professionals must be able to explain, in non-technical terms, how an AI reached a critical decision.

- Human Oversight: There is no “set it and forget it” with autonomous systems; human-in-the-loop (HITL) mechanisms are required to ensure decisions align with human values and laws.

- Fairness and Non-Discrimination: AI models must be rigorously tested to prevent biases related to race, gender, or socioeconomic status in areas like hiring or financial services.

- Environmental Responsibility: Organizations must consider the high energy consumption of large-scale AI and prioritize efficient, sustainable practices, such as using SLMs where possible.

The Role of the CISO and Board

Governance must be “active, not passive”. CISOs are advised to maintain a comprehensive inventory of every AI model, dataset, and third-party integration in the enterprise environment—”you cannot govern what you do not see”. Boards are increasingly judging brands based on the “relational intelligence” and trustworthiness of their agents rather than traditional corporate identity markers.

Conclusion: Orchestrating the Agentic Future

The convergence of AI and SaaS into the AAAS paradigm represents a definitive end to the era of software as a passive repository of data. By late 2026, the global digital ecosystem will be defined by the “Agentic Enterprise”—an organization where speed, precision, and adaptability are the primary currencies of success.

Strategic success in this new age requires a dual focus: technical modernization and human-centric governance. Organizations must move beyond experimentation, addressing the “AI value gap” by building integrated platforms that unify data, context, and action. The winners of 2026 and beyond will be those who transition from managing tools to orchestrating digital workers, leveraging the modular power of SLMs and LLMs while maintaining a robust, ethical, and cost-optimized foundation. The future is not a destination but a state of continuous, autonomous labor; for the proactive enterprise, the revolution has already begun.

FAQ’S

AAAS refers to Analytics-as-a-Service, Agent-as-a-Service, and Artificial Intelligence-as-a-Service (AIaaS). It represents cloud-delivered intelligence that analyzes data, executes tasks autonomously, and integrates AI models into enterprise workflows.

Unlike traditional SaaS, which delivers static tools, AIaaS provides autonomous, reasoning agents that can perform tasks, make decisions, and adapt workflows, moving software from a tool to an active workforce.

AI-native SaaS is built from the ground up around AI, with intelligence as its core. Unlike AI-enhanced platforms that add AI features onto legacy systems, AI-native solutions rely on inference pipelines and agentic systems as foundational components.

MCP is a unified integration layer connecting AI models (like LLMs or SLMs) to enterprise data sources. It ensures consistent context across tools, enabling autonomous agents to operate safely and effectively.

SLMs (<10B parameters) are faster, cheaper, and privacy-friendly compared to large models. They excel in specialized tasks, reduce latency, and can run locally on edge devices, making them ideal for enterprise AAAS applications.